Struggling to keep your website updated and SEO-friendly with lots of changes? It can be a daunting task to manually inform search engines about every change on your website, especially when it has a lot of content.

How can you make sure that updating your content often helps your website rank better in search results? It’s a real challenge to keep everything updated and make sure search engines notice.

The answer lies in web crawlers, also known as crawler bots. These bots go through your website, find new updates, and help search engines pick them up, which can improve your SEO. In this blog post, we’ve put together a list of web crawlers that will make your job easier and smoother.

Let’s get started and make your website shine!

What Are Web Crawlers?

Web crawlers, also called web spiders or web robots, are computer programs that systematically browse the internet to gather information from websites.

They start at one web page and follow links to discover and index other pages. This process helps search engines find and organize information from various websites.

Googlebot, which Google uses to automate browsing and build an index of web content, is a well-known example of a web crawler.

How Do Web Crawlers Work?

A web crawler starts with a list of web addresses called seeds. It visits these addresses to look at the web pages, reads their content, and looks for links to other web addresses on these pages.

The crawler then adds these new addresses to its list and repeats the process. As it goes, it stores information from the pages it visits in a database.

It follows politeness rules to avoid overloading websites, such as spacing out its requests to the same server, and it skips pages that a site’s robots.txt file tells it not to crawl. This process allows the crawler to collect and update information about many web pages.
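The seed-and-frontier loop described above can be sketched in Python using only the standard library. This is a simplified illustration, not how any particular search engine’s crawler works: the `crawl` function, the `ExampleBot` user agent, and the injected `fetch` callable are hypothetical, and the sketch assumes all seeds live on one host so a single parsed robots.txt applies. A real crawler would also fetch robots.txt per host, throttle requests per server, and handle network errors.

```python
import time
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Set
from urllib.parse import urldefrag, urljoin
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds: List[str], fetch: Callable[[str], str],
          robots: RobotFileParser, user_agent: str = "ExampleBot",
          max_pages: int = 100, delay: float = 0.0) -> Dict[str, str]:
    """Breadth-first crawl starting from the seed URLs (hypothetical helper)."""
    frontier = deque(seeds)        # URLs waiting to be visited
    seen: Set[str] = set(seeds)    # every URL ever queued, to avoid repeats
    store: Dict[str, str] = {}     # stands in for the crawler's database
    while frontier and len(store) < max_pages:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue               # robots.txt says not to crawl this URL
        html = fetch(url)
        store[url] = html
        time.sleep(delay)          # crude politeness pause between requests
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments before queueing.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return store
```

Injecting `fetch` keeps the loop testable offline; in practice it would wrap an HTTP client that sends the crawler’s User-Agent header.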

Types of Web Crawlers

- General web crawlers: These crawlers are designed to index and retrieve information from a wide range of websites and are used by search engines to build their databases.

- Focused web crawlers: These crawlers are designed to target specific types of content or websites, such as news articles, academic papers, or social media profiles.

- Incremental web crawlers: These crawlers are designed to periodically revisit websites to update their index with new or updated content.

- Deep web crawlers: These crawlers are designed to access and index content that is not easily accessible through traditional search engines, such as dynamic web pages, databases, and password-protected sites.

- Vertical web crawlers: These crawlers are designed to focus on a specific industry or topic, such as e-commerce, finance, or healthcare, to provide more targeted search results for users.
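To make the incremental crawler idea more concrete, here is a minimal sketch of how such a crawler might decide whether a revisited page has changed and how soon to come back. The class names, the SHA-256 content hash, and the halve-on-change, double-when-unchanged revisit schedule are illustrative assumptions; production crawlers rely on their own, more elaborate freshness signals.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PageRecord:
    content_hash: str
    revisit_after: float  # seconds until the next planned visit


@dataclass
class IncrementalIndex:
    min_interval: float = 3600.0        # revisit at most once an hour
    max_interval: float = 7 * 86400.0   # and at least once a week
    records: Dict[str, PageRecord] = field(default_factory=dict)

    def update(self, url: str, content: str) -> bool:
        """Record a fresh copy of url; return True if the page is new or changed."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        old = self.records.get(url)
        if old is None:
            self.records[url] = PageRecord(digest, self.min_interval)
            return True
        if old.content_hash != digest:
            # Changed since last visit: come back sooner next time.
            old.content_hash = digest
            old.revisit_after = max(self.min_interval, old.revisit_after / 2)
            return True
        # Unchanged: back off, but never beyond max_interval.
        old.revisit_after = min(self.max_interval, old.revisit_after * 2)
        return False
```

Pages that change often end up revisited frequently, while static pages drift toward the weekly ceiling, which is the basic trade-off an incremental crawler manages.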

Best Web Crawlers List

Here is the list of web crawlers. Let’s look at each one in depth.

1. Google Bot

Google Bot is a web crawling bot used by Google to discover and index web pages on the internet.

It is responsible for scanning and analyzing websites to determine their content, relevance, and ranking in search results.

Key Features of Google Bot

- Crawling: Google Bot systematically scans and visits web pages to gather information and index them in Google’s search database.

- Indexing: It stores and organizes the information collected from web pages to make it easily accessible for search queries.

- Rendering: Google Bot can render web pages to understand their content, including text, images, and multimedia.

- Speed and efficiency: Google Bot continuously works to update and improve the search index, ensuring that users receive the most relevant and up-to-date search results.

2. Bing Bot

Bing Bot is a web crawling bot used by Microsoft’s search engine, Bing, to discover and index web pages on the internet.

It works similarly to Google Bot, scanning websites to gather information and make them available in Bing’s search results.

Key Features of Bing Bot

- Crawling: Bing Bot systematically visits web pages to gather information and index them in Bing’s search database.

- Indexing: It stores and organizes the information collected from web pages to make it easily accessible for search queries.

- Rendering: Bing Bot can render web pages to understand their content, including text, images, and multimedia.

- Mobile-friendliness: It prioritizes mobile-friendly web pages for indexing and ranking in search results.

3. DuckDuckGo Bot (DuckDuckBot)

DuckDuckGo Bot is a web crawling bot used by the DuckDuckGo search engine to find and index web pages on the internet. It works by visiting websites and gathering information to include in DuckDuckGo’s search results.

Key Features of DuckDuckGo Bot

- Crawling: The bot systematically visits web pages to collect information and index them in DuckDuckGo’s search database.

- Privacy: DuckDuckGo, the search engine the bot serves, respects user privacy and does not track or store personal information about users’ search activities.

- Instant Answers: DuckDuckGo provides instant answers to search queries, displaying relevant information directly on the search results page.

- Zero-click Info: DuckDuckGo offers “zero-click” info, answering some queries directly so users don’t need to click on any search result.

4. Yandex Bot

Yandex Bot is a web crawling bot used by the Yandex search engine to find and index web pages on the internet. It works by visiting websites and gathering information to include in Yandex’s search results.

Key Features of Yandex Bot

- Indexing: It stores and organizes the information collected from web pages to make it easily accessible for search queries.

- Language support: Yandex Bot supports multiple languages, allowing it to index and display search results in various languages.

- Image search: It can also crawl and index images on the web, making them searchable through Yandex’s image search feature.

- Geographical relevance: Yandex Bot can prioritize search results based on geographical location, providing relevant local search results to users.

5. Apple Bot

Next on the web crawlers list is Applebot, the web crawler Apple introduced in 2015. Apple is the company behind the Safari web browser.

Like the crawlers discussed above, Applebot crawls the web to find new web pages and update existing ones, and it is designed to work well with Apple’s ecosystem of products and services.

Key Features of Apple Bot

- Applebot’s Integration with Siri and Spotlight: According to Apple, Applebot is used by various Apple products, including Siri and Spotlight Suggestions.

- Factors Influencing Applebot’s Search Indexing: Apple ranks results from Applebot’s index using criteria such as user engagement, content relevance, the quality of external links, user location signals, and webpage design characteristics. These factors influence how search results are displayed to users.

- Rendering Capabilities: Applebot can render JavaScript and CSS, meaning it can process dynamically loaded content on websites, unlike some older search engine crawlers. This ensures that Applebot “sees” the web page as a user would.

- Functional Similarities to Googlebot: Applebot works much like Googlebot. If a site’s robots.txt file has no instructions specifically for Applebot but does have instructions for Googlebot, Applebot follows the Googlebot instructions.
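Applebot’s fallback to Googlebot rules can be approximated with Python’s built-in `urllib.robotparser`: if robots.txt names Applebot, evaluate the file as Applebot, otherwise evaluate it as Googlebot. The helper names below are hypothetical, and this is only an approximation, because `urllib.robotparser` applies the first matching rule in a group rather than the longest-match precedence Google documents, so edge cases with overlapping Allow and Disallow rules may differ.

```python
from urllib.robotparser import RobotFileParser


def has_group_for(robots_txt: str, agent: str) -> bool:
    """True if robots.txt contains a User-agent line naming this agent."""
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0]          # drop comments
        key, sep, value = line.partition(":")
        if (sep and key.strip().lower() == "user-agent"
                and value.strip().lower() == agent.lower()):
            return True
    return False


def applebot_can_fetch(robots_txt: str, url: str) -> bool:
    """Use Applebot's group if one exists, otherwise Googlebot's rules
    (which in turn fall back to the '*' group if there is no Googlebot group)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    agent = "Applebot" if has_group_for(robots_txt, "Applebot") else "Googlebot"
    return parser.can_fetch(agent, url)
```

For example, a file that only disallows `/private/` for Googlebot also keeps Applebot out of `/private/`, while adding a dedicated Applebot group overrides that.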

6. Swift Bot

Swift Bot (Swiftbot) is the web crawler used by Swiftype, a site search service designed to be fast and efficient. It crawls websites to find new pages and update existing ones so that they are searchable through Swiftype’s site search.

Key Features of Swift Bot

- Customized Website Indexing with Swiftype: Swiftype is especially useful for websites with many pages, offering a user-friendly interface to effectively catalog and index all pages.

- Custom Crawl: Most web crawlers automatically crawl as much of the web as they can to build a comprehensive search index. Swiftbot, by contrast, only crawls the websites that customers specifically ask it to crawl.

- Easy Website Indexing with Swiftype: Swiftbot offers a simple and straightforward way to index your website’s content for search. Compared to using the Swiftype API, using the web crawler requires less technical expertise and allows you to set up site searches more quickly and easily.

- Website Crawling Similarities to Google: Swiftype’s crawler collects and indexes data from your website in much the same way Google crawls data for its global search engine.

7. Slurp Bot

Slurp Bot is the web crawling bot used by the Yahoo! search engine to find and index web pages on the internet.

It works by visiting websites and gathering information to include in Yahoo!’s search results.

Key Features of Slurp Bot

- Crawling: The bot systematically visits web pages to collect information and index them in Yahoo!’s search database.

- Indexing: It stores and organizes the information collected from web pages to make it easily accessible for search queries.

- Freshness: Slurp Bot continuously updates and improves the search index to provide users with the most relevant and up-to-date search results.

- Multimedia support: Slurp Bot can crawl and index various types of media, including images and videos, to include in Yahoo!’s search results.

8. Baidu Spider

Baidu Spider is the web crawler used by Baidu, the most popular search engine in China. It crawls the web to find new web pages and is designed to work well with the Chinese language and character set.

Key Features of Baidu Spider

- Automatic Site Scanning and Crawling: The Baidu spider automatically scans your site for new updates. If this affects your site’s performance, you can adjust the crawling rate in your Baidu Webmaster Tools account.

- Baidu Webmaster Tools: Baidu offers its own version of Google Search Console, called Baidu Webmaster Tools or Baidu Ziyuan. With a webmaster account, you can analyze and detect crawling issues, as well as view the HTML content that Baidu Spider has crawled.

- Identifying Baidu Spider Activity on Your Site: To see whether Baidu Spider is visiting your site, look in your server logs for user agents such as Baiduspider, Baiduspider-image, Baiduspider-video, and similar identifiers. If any of these user agents appear, Baidu Spider is crawling your site.

- Market Share: Baidu is one of the top search engines in the world, and it holds an 80% market share in mainland China’s search engine market.
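To check your own server logs for Baidu Spider, you can search each line for the Baiduspider token and its hyphenated variants such as Baiduspider-image. The sketch below assumes plain-text access-log lines and a hypothetical `count_baidu_hits` helper. Keep in mind that any client can send this user agent, so a match shows what a request claimed to be, not proof that it came from Baidu.

```python
import re
from collections import Counter
from typing import Iterable

# Matches "Baiduspider" plus an optional suffix such as "-image" or "-video".
BAIDU_UA = re.compile(r"Baiduspider(?:-[a-z]+)?", re.IGNORECASE)


def count_baidu_hits(log_lines: Iterable[str]) -> Counter:
    """Count requests per Baidu spider variant in access-log lines."""
    hits: Counter = Counter()
    for line in log_lines:
        match = BAIDU_UA.search(line)
        if match:
            hits[match.group(0)] += 1
    return hits
```

A per-variant breakdown like this makes it easy to see, for instance, whether most of Baidu’s traffic is going to images rather than pages before you adjust the crawl rate in Baidu Webmaster Tools.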

9. Facebook External Hit

Facebook External Hit is not a search engine crawler like Google Bot or Bing Bot. It is the user agent (facebookexternalhit) that appears in server logs when Facebook’s servers access content from a website.

This happens when someone shares a link from that website on Facebook, and Facebook needs to access the site to gather information like the title, description, and image to display in the shared post.

Key Features of Facebook External Hit

- Content gathering: When a link from a website is shared on Facebook, the External Hit server accesses the site to collect information for the shared post, such as the title, description, and image.

- Post preview: The information gathered from the website is used to create a preview of the shared link on Facebook, allowing users to see a snippet of the content before clicking on the link.

- Analyzing Shared Web Content: The Facebook Crawler analyzes the HTML of a website or app that is shared on Facebook.

- Facebook’s Advertising-Enhancing Crawler: One of Facebook’s main crawling bots is Facebot, which helps improve advertising performance.
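The title, description, and image that Facebook’s crawler collects usually come from Open Graph `<meta property="og:...">` tags in the page’s HTML. The sketch below imitates that lookup with Python’s standard-library HTML parser. The `link_preview` function and its fallback to the page’s `<title>` element are illustrative assumptions, not Facebook’s actual logic.

```python
from html.parser import HTMLParser
from typing import Dict


class OpenGraphParser(HTMLParser):
    """Collects og:* meta tags and the text of the <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self.tags: Dict[str, str] = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta":
            prop = attributes.get("property") or ""
            if prop.startswith("og:") and attributes.get("content"):
                self.tags[prop] = attributes["content"]
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def link_preview(html: str) -> Dict[str, str]:
    """Build a shared-link preview, preferring Open Graph values."""
    parser = OpenGraphParser()
    parser.feed(html)
    return {
        "title": parser.tags.get("og:title", parser.title.strip()),
        "description": parser.tags.get("og:description", ""),
        "image": parser.tags.get("og:image", ""),
    }
```

If your shared links show the wrong title or image, checking which `og:` tags a parser like this finds is a quick first debugging step.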

10. SEMrushBot

SEMrushBot is a web crawling bot used by the SEMrush platform to collect data from websites. It visits web pages to gather information that is used for analyzing a site’s performance in search engines and providing insights for digital marketing strategies.

Key Features of SEMrushBot

- Data collection: It systematically visits web pages to gather information such as keywords, backlinks, and rankings to provide insights for search engine optimization and digital marketing efforts.

- Website analysis: SEMrushBot collects data to analyze a website’s performance, including its visibility in search results, traffic, and potential areas for improvement.

- Competitor research: It gathers data on competitors’ websites to help users understand their strategies and performance in search engines.

- Backlink analysis: It gathers data on backlinks pointing to a website, which helps users understand their link profile and identify opportunities for improving their backlink strategy.

Wrapping Up

Web crawlers are important for sorting and organizing internet content. The 10 most common bots and spiders in 2025, such as Google Bot and Bing Bot, are crucial for finding and categorizing web pages, which affects the search results people see.

Knowing about these web crawlers and how they work can help website owners and marketers improve their online presence.

As technology advances, these web crawlers will likely become even better at navigating and understanding the web.

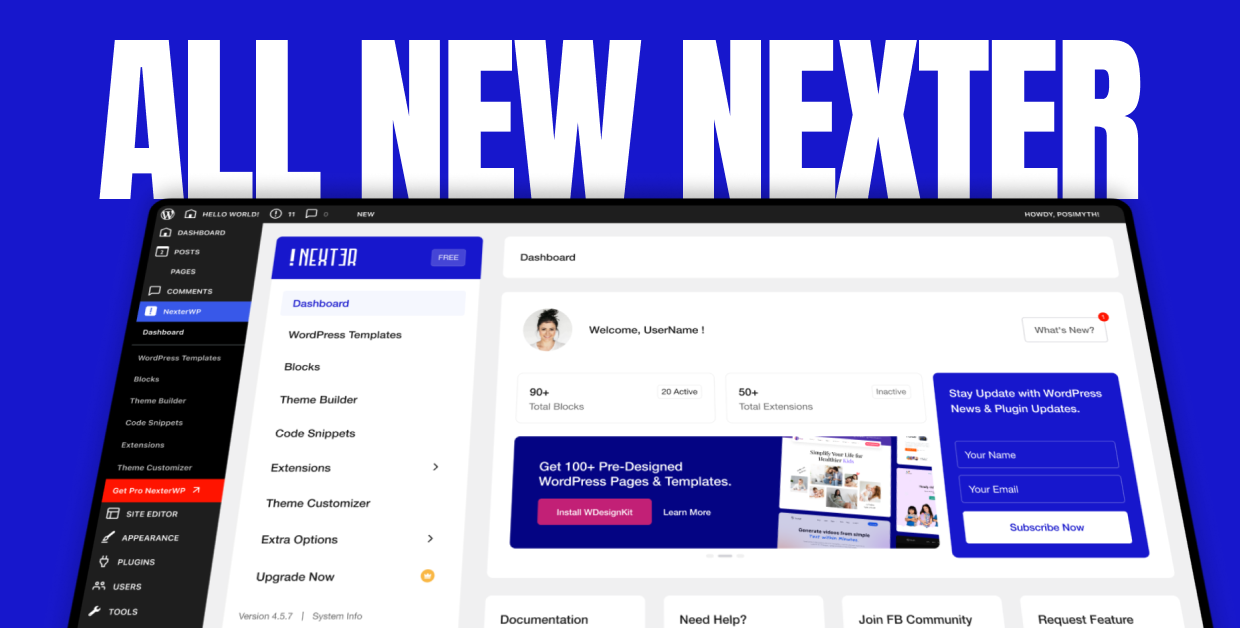

Moreover, if you’re using the default Gutenberg editor to build your WordPress site, we recommend checking out Nexter Blocks for Gutenberg. This all-in-one plugin offers 90+ unique Gutenberg blocks that enhance the functionality of the default WordPress editor.

FAQs on Web Crawlers List

How do I crawl all the pages of a website?

To crawl all pages of a website, you can use a web crawler tool like Screaming Frog SEO Spider or Sitebulb. These tools allow you to input the website’s URL and start a crawl, which will systematically scan and index all accessible pages on the site, providing detailed information on each page’s content, structure, and links.

How do I identify a web crawler?

Web crawlers can be identified by their User-Agent string, which is included in the HTTP header of their requests. Most web crawlers will include a reference to their name or company in this string. For example, Googlebot is the web crawler used by Google, and its User-Agent string includes the word “Googlebot”.
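Here is a minimal sketch of that idea: a lookup table of User-Agent tokens for the crawlers covered in this article and a hypothetical `identify_crawler` helper that returns the first match. The token list reflects commonly seen strings and should be checked against each operator’s documentation. Because any client can claim to be Googlebot, treat a match as a hint rather than proof.

```python
from typing import Optional

# Lowercase tokens each crawler commonly includes in its User-Agent header
# (illustrative list; verify against each operator's documentation).
CRAWLER_TOKENS = {
    "googlebot": "Googlebot",
    "bingbot": "Bing Bot",
    "duckduckbot": "DuckDuckGo Bot",
    "yandexbot": "Yandex Bot",
    "applebot": "Applebot",
    "swiftbot": "Swift Bot",
    "slurp": "Yahoo! Slurp",
    "baiduspider": "Baidu Spider",
    "facebookexternalhit": "Facebook External Hit",
    "semrushbot": "SEMrushBot",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler name whose token appears in user_agent, or None."""
    ua = user_agent.lower()
    for token, name in CRAWLER_TOKENS.items():
        if token in ua:
            return name
    return None
```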

How many types of crawlers are there?

There are several types of web crawlers, including general-purpose crawlers, focused crawlers, incremental crawlers, deep web crawlers, and vertical crawlers. Each type of crawler has its specific purpose and is designed to crawl certain types of websites or content.

Which web crawlers are widely used today?

Googlebot is generally considered the most widely used web crawler today, followed by Bingbot and Yahoo! Slurp. Other widely used crawlers include Baidu Spider, Yandex Bot, and Sogou Spider.

How do you tell web crawlers which part of the sites to not index?

To keep crawlers out of parts of your website, use a robots.txt file in your site’s root directory. This file tells search engine bots which directories or pages not to crawl. Note that robots.txt controls crawling rather than indexing: a blocked URL can still end up in search results if other sites link to it. To keep a specific page out of the index, add a meta tag such as `<meta name="robots" content="noindex">` to that page and leave the page crawlable so bots can see the tag.
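If you want to audit which of your pages carry a noindex directive, a small script can look for robots meta tags in the HTML. The sketch below uses Python’s standard-library HTML parser; the `is_noindex` helper is hypothetical, and it treats the `none` directive as implying noindex, as Google documents. It does not check the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser
from typing import Set


class RobotsMetaParser(HTMLParser):
    """Records directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for part in attributes.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def is_noindex(html: str) -> bool:
    """True if the page asks crawlers not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives
```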

How can I protect my website from malicious crawlers?

To protect your website from harmful crawlers, combine several measures. A robots.txt file only guides well-behaved bots, since malicious crawlers often ignore it, so also use CAPTCHAs and rate limiting, monitor user-agent strings, block suspicious IP addresses, analyze traffic behavior, run regular security checks, and consider using a web application firewall (WAF).
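Rate limiting caps how many requests a single client can make in a given period, which slows down aggressive scrapers. Here is a minimal in-memory sketch of a sliding-window limiter keyed by IP address. The class name, the limits, and the suggestion to answer blocked requests with HTTP 429 are illustrative assumptions; on a real site this is usually handled by the web server, a CDN, or a WAF rather than application code.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within `window` seconds."""

    def __init__(self, limit: int, window: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock
        self.requests: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str) -> bool:
        now = self.clock()
        recent = self.requests[client_ip]
        # Forget timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # over the limit, e.g. respond with HTTP 429
        recent.append(now)
        return True
```

Rejected requests are not recorded, so a blocked client regains access as soon as its earlier requests age out of the window. The injectable `clock` makes the limiter easy to test.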